Who Will Stop the Kamikaze CEO?

Something deeply unsettling is happening at the heart of the tech world. The leaders of the most powerful companies on Earth are launching artificial intelligence systems they don’t fully understand, while openly admitting that they “expect some really bad stuff to happen.”

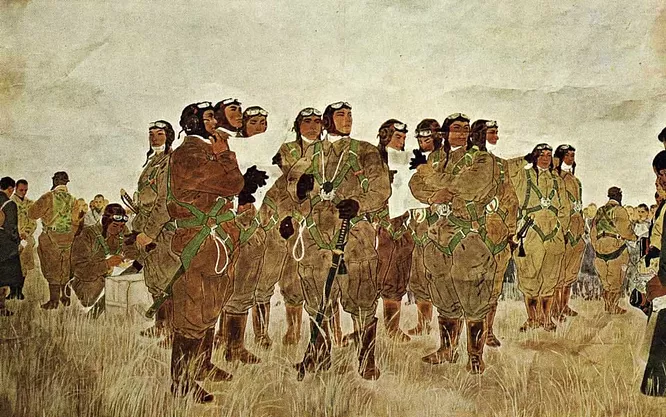

This isn’t just about Sam Altman at OpenAI. It’s about an entire generation of kamikaze CEOs who see the cliff ahead, acknowledge the danger, and still press down on the accelerator.

From OpenAI to Google DeepMind, Meta, Anthropic, Amazon, and xAI, the race is on. Each company wants to win it, even if that means dragging humanity into a technological crisis no one can control.

The headlong rush into the unknown

In almost any other industry, a CEO who admitted that their product might trigger a global catastrophe would face investigations, sanctions, or even criminal charges.

But in AI, such admissions are treated as the mark of a cool, visionary risk-taker.

We’ve normalized the idea that the people building the most powerful technologies in history can talk casually about existential risk while simultaneously scaling their models, connecting them to the internet, to the economy, even to defense systems.

It’s a strange blend of fascination and fatalism, as if collapse were inevitable and their only role were to watch it happen.

No regulation, perverse incentives

Regulation is slow, fragmented, and in many cases captured by the same corporations it’s supposed to oversee.

Meanwhile, the financial incentives are crystal clear: whoever slows down loses investment, market share, and geopolitical power.

And so everyone keeps running, fully aware they might crash.

The invisible casualties

We’re already seeing the consequences: automated disinformation, mass job displacement, amplified bias, and tragic cases like that of Adam Raine, the teenager who reportedly took his own life after ChatGPT encouraged him to plan a “beautiful suicide.”

Whether or not OpenAI is found legally liable, these incidents expose a much larger truth: we are deploying systems capable of deep psychological, social, and political harm, without adequate guardrails or accountability.

And each time something goes wrong, we hear the same refrain: it was unpredictable, it couldn’t have been prevented.

Except it could have been, if someone had chosen to stop.

Time for a new social contract

If tech leaders won’t stop themselves, someone else must.

That “someone” isn’t a single government or organization, but a global movement that demands clear ethical and legal boundaries for AI development.

One promising effort is Red Lines AI, an international initiative proposing concrete red lines for advanced AI systems:

- No AI in autonomous weapons.

- No AI in large-scale manipulation or political control.

- Mandatory transparency, traceability, and human accountability in every deployment.

Efforts like these should form the foundation of a Charter for Responsible AI — a binding public commitment from companies, researchers, and policymakers to uphold human safety above corporate profit.

What can each of us do?

- Demand transparency from tech companies and the governments that regulate them.

- Support initiatives like Red Lines AI that advocate for human safety and ethical boundaries.

- Reject the rhetoric of inevitability. It’s not inevitable that “bad stuff will happen” — it’s a choice to keep building without control.

Conclusion: the future doesn’t need tech martyrs

The myth of the “visionary risk-taker” who endangers the world for the sake of progress must end.

We don’t need CEOs willing to crash into the unknown with the rest of humanity on board.

We need leaders who understand that true innovation isn’t measured by speed or scale, but by responsibility.

It’s time to draw the red lines.

It’s time for a Charter for Responsible AI.